In Part 2 we discussed SLAs. As an IT engineer, I consider a 99.95% SLA (for a single instance) a pretty good one. But you probably know by now that I like to compare IT with the aerospace industry. As a passenger, would you be happy if, say, a flight computer in your jet were allowed to malfunction for roughly 22 minutes in a given month? I'd be scared. And experience tells us that this is not what happens in the real world. So how does the aerospace industry achieve such high availability?
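To make the arithmetic behind that number concrete, here is a small sketch that converts an SLA percentage into allowed downtime. It assumes a 30-day month; a 31-day month would give about 22.3 minutes at 99.95%.

```python
def allowed_downtime_minutes(sla_percent: float, period_days: float = 30.0) -> float:
    """Return the downtime (in minutes) that an SLA permits over a period."""
    period_minutes = period_days * 24 * 60          # 30 days -> 43,200 minutes
    unavailable_fraction = 1 - sla_percent / 100     # 99.95% -> 0.0005
    return period_minutes * unavailable_fraction


if __name__ == "__main__":
    for sla in (99.0, 99.9, 99.95, 99.99):
        # e.g. 99.95% -> 21.6 min per 30-day month
        print(f"{sla}% -> {allowed_downtime_minutes(sla):.1f} min per 30-day month")
```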

Aerospace industry approach

I have wanted to write about this for quite some time. In fact, I've been thinking about it since May 2015, after reading a fascinating presentation by Peter Seiler and Bin Hu called "Design and Analysis of Safety Critical Systems". I reached out to Peter Seiler (Assistant Professor in the Department of Aerospace Engineering and Mechanics, University of Minnesota) and asked for permission to reuse some of the slides from this presentation. Thank you, Peter!

Let's take the Boeing 777-200 as an example (the 777 was Boeing's first fly-by-wire airliner). The Dictionary of Aeronautical Terms defines "fly-by-wire" as:

Fly-by-wire (FBW) is a system that replaces the conventional manual flight controls of an aircraft with an electronic interface. The movements of flight controls are converted to electronic signals transmitted by wires (hence the fly-by-wire term), and flight control computers determine how to move the actuators at each control surface to provide the ordered response. The fly-by-wire system also allows automatic signals sent by the aircraft's computers to perform functions without the pilot's input, as in systems that automatically help stabilize the aircraft, or prevent unsafe operation of the aircraft outside of its performance envelope.

To put it simply, the pilots' controls are no longer directly connected to the control surfaces (ailerons, rudder, etc.). Instead, pilot inputs are sent to the computers, which then "move" the control surfaces accordingly. This arrangement obviously makes the flight control computers critical to the overall safety of the aircraft.

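Modern flight control systems are very complex, but at their heart is a simple, classic feedback loop: sensors measure the aircraft's actual state, the flight computer compares it with the commanded state, and actuators move the control surfaces to reduce the difference.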
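A minimal sketch of such a loop, assuming a toy one-dimensional "aircraft" that moves a fixed fraction toward whatever the actuator commands, noise-free sensors, and a purely proportional control law. Real flight control laws are far more elaborate; the names `kp` and `response` are hypothetical.

```python
from typing import List


def simulate_feedback_loop(commanded: float, steps: int = 40,
                           kp: float = 0.8, response: float = 0.5) -> List[float]:
    """Run a toy closed loop: sensor -> compare -> controller -> actuator -> plant."""
    state = 0.0                      # e.g. pitch angle, starting level
    trace = []
    for _ in range(steps):
        measured = state             # sensor reading (assumed perfect)
        error = commanded - measured # how far we are from what the pilot asked for
        actuator = kp * error        # proportional control law
        state += response * actuator # hypothetical plant: responds to the actuator
        trace.append(state)
    return trace


if __name__ == "__main__":
    trace = simulate_feedback_loop(commanded=5.0)
    print([round(x, 3) for x in trace[:5]], "...", round(trace[-1], 6))
```

With these values each iteration removes 40% of the remaining error, so the state converges smoothly to the commanded value. Note that the whole loop runs on one computer: if it fails, nothing closes the loop.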

Simple indeed. But with just a single flight computer we would probably only achieve availability similar to the cloud instances discussed in Part 2, a far cry from the required reliability targets.

What can we do to increase reliability? As we saw in Part 1, we can add redundant components to improve the fault tolerance of the system.
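As a rough illustration, here is the textbook formula for parallel redundancy, sketched under two assumptions: any single working component is enough (an ideal failover), and components fail independently. With these assumptions, two 99.95% instances would in theory give 99.999975%. The independence assumption is exactly what common-mode faults break, which is why the aerospace principles below care so much about separation and dissimilarity.

```python
def parallel_availability(component_availability: float, n: int) -> float:
    """Availability of n redundant components when any one of them is enough.

    Assumes independent failures and perfect failover (both idealizations).
    """
    all_down = (1 - component_availability) ** n   # probability every copy is down
    return 1 - all_down


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(n, f"{parallel_availability(0.9995, n):.10f}")
```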

In the IT world, adding one extra server gives us a 2-node cluster (in an active/active or active/passive arrangement). With more nodes, things get more complicated; one approach is the Majority Node Set quorum model. In the aerospace industry, triple modular redundancy is very common. With 3 redundant components we arrive at the classic "Triplex" architecture:
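The majority idea boils down to simple arithmetic. This is a generic sketch of strict-majority voting, not the implementation of any particular cluster product. It shows why 2 nodes on their own cannot survive the loss of either node (real 2-node clusters typically add a witness or tie-breaker), while 3 nodes tolerate one failure.

```python
def quorum_size(nodes: int) -> int:
    """Smallest strict majority of the voting members."""
    return nodes // 2 + 1


def tolerated_failures(nodes: int) -> int:
    """How many members can fail while a strict majority still remains."""
    return nodes - quorum_size(nodes)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} nodes: quorum {quorum_size(n)}, tolerates {tolerated_failures(n)} failure(s)")
```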

Instead of one, we have 3 independent components for each critical subsystem. These components take part in a voting process to determine the correct result or action.

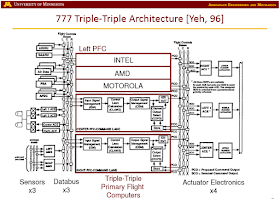
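A minimal sketch of the voting step, assuming independent components and a perfect voter. `majority_vote` handles discrete outputs. For continuous signals, where three healthy computers will rarely produce bit-identical numbers, `mid_value_select` picks the middle value so that a single wild output is ignored. `tmr_reliability` is the standard 2-out-of-3 formula. All function names are illustrative.

```python
from collections import Counter
from typing import Hashable, Optional, Sequence


def majority_vote(outputs: Sequence[Hashable]) -> Optional[Hashable]:
    """Return the value reported by a strict majority of components, else None."""
    if not outputs:
        return None
    value, count = Counter(outputs).most_common(1)[0]
    return value if count > len(outputs) // 2 else None


def mid_value_select(a: float, b: float, c: float) -> float:
    """For continuous signals: take the middle of three values."""
    return sorted((a, b, c))[1]


def tmr_reliability(r: float) -> float:
    """Probability that a 2-out-of-3 system works: all three work, or exactly two do."""
    return r**3 + 3 * r**2 * (1 - r)   # simplifies to 3r^2 - 2r^3
```

For example, `tmr_reliability(0.9)` is 0.972, better than a single component. The same formula also shows that TMR only helps when r > 0.5. Below that point, failed components are more likely to outvote the healthy ones.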

But even this is not enough, and aerospace engineers go further. The 1996 paper "Triple-Triple Redundant 777 Primary Flight Computer" by Y.C. (Bob) Yeh describes 5 safety-related principles (design constraints) of Boeing's 777 fly-by-wire design philosophy:

- Common Mode/Common Area Faults

- Separation of FBW Components

- FBW Functional Separation

- Dissimilarity

- FBW Effect on Structure

This is where we see triple modular redundancy evolving into the triple-triple architecture. We have 3 identical channels (left, centre, right)...

For obvious reasons, the physical wiring for the different channels is routed as far apart as possible (to satisfy the second principle, "Separation of FBW Components").

... and 3 dissimilar lanes in each channel (one in command, the other 2 functioning as monitors).
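A heavily simplified sketch of the command/monitor idea inside one channel, under these assumptions: every lane computes the same function (standing in for common software built for dissimilar processors), outputs are compared within a hypothetical `tolerance`, a command lane is accepted if at least half of its monitors agree with it, and a lane that its monitors outvote is taken offline so the next healthy lane takes command. The `Lane` class and `channel_output` function are hypothetical illustrations, not how the 777 PFC is actually implemented.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Lane:
    name: str                          # hypothetical lane label
    compute: Callable[[float], float]  # same control law, "dissimilar" hardware
    healthy: bool = True


def channel_output(lanes: List[Lane], pilot_input: float,
                   tolerance: float = 1e-6) -> Optional[float]:
    """Return the channel's command, or None if the channel drops out."""
    for lane in lanes:
        if not lane.healthy:
            continue
        command = lane.compute(pilot_input)           # lane currently in command
        monitors = [m for m in lanes if m.healthy and m is not lane]
        if not monitors:
            return None  # nothing left to cross-check against: channel disengages
        agree = sum(abs(m.compute(pilot_input) - command) <= tolerance
                    for m in monitors)
        if agree * 2 >= len(monitors):                # enough monitors confirm
            return command
        lane.healthy = False                          # outvoted: next lane takes command
    return None


if __name__ == "__main__":
    lanes = [Lane("A", lambda x: 2 * x + 1),          # faulty lane (seeded error)
             Lane("B", lambda x: 2 * x),
             Lane("C", lambda x: 2 * x)]
    print(channel_output(lanes, 3.0), [lane.healthy for lane in lanes])
```

In the usage example, lane A starts in command, both monitors disagree with it, so A is taken offline and lane B's output is used. With three such channels, a voter across channels adds the outer layer of the triple-triple design.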

I was fascinated by the dissimilarity principle. Many methods were used in the 777 architecture to satisfy it, but the IT engineer in me was particularly impressed by this approach:

Dissimilar Microprocessor and Compilers (with Common software)

Or quoting [Yeh, 96]:

The microprocessors are considered to be the most complex hardware devices. The INTEL 80486, Motorola 68040 and AMD 29050 microprocessors were selected for the PFCs (Primary Flight Computers - DK). The dissimilar microprocessors lead to dissimilar interface hardware circuitries and dissimilar ADA compilers.

How cool is that?!!!

Intel 80486 (which powered PCs around the world in the '90s), Motorola 68040 (Macintosh Quadra 700, anyone?)... ah, memories!

So the designers selected 3 different CPU architectures. This means 3 different versions of machine code, generated from the same (common) Ada source by 3 different Ada compilers, one per processor, to provide triple dissimilarity. Wow... What an incredible level of assurance this approach provides!

While reading about this approach, I was also contemplating the idea of having 3 independent groups of software developers implement the same project requirements to avoid (or at least reduce the probability of) producing the same bugs...
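This idea is known as N-version programming. Below is a toy sketch of it, using a hypothetical specification ("return the median of three integers") and three hypothetical "teams". Team C's version contains a deliberately seeded bug. Majority voting masks that bug at run time, and comparing the versions on many inputs (differential testing) exposes it. One caveat: independently written versions can still fail on the same inputs, for example because of a shared misreading of the requirements. So the failures are not guaranteed to be independent.

```python
from collections import Counter
from itertools import product


# Hypothetical spec: return the middle value of three integers.
def median3_team_a(a: int, b: int, c: int) -> int:
    return sorted((a, b, c))[1]


def median3_team_b(a: int, b: int, c: int) -> int:
    return a + b + c - min(a, b, c) - max(a, b, c)


def median3_team_c(a: int, b: int, c: int) -> int:
    if a < b:
        return b if b < c else max(a, c)
    if a < c:
        return a
    return b  # seeded bug: should be max(b, c) when a is the largest value


VERSIONS = (median3_team_a, median3_team_b, median3_team_c)


def n_version_median3(a: int, b: int, c: int) -> int:
    """Run every version and return the majority answer."""
    results = [version(a, b, c) for version in VERSIONS]
    value, votes = Counter(results).most_common(1)[0]
    if votes < 2:
        raise RuntimeError(f"no majority among versions: {results}")
    return value


def find_disagreements(values=range(-2, 3)):
    """Differential testing: inputs on which the versions do not all agree."""
    return [args for args in product(values, repeat=3)
            if len({version(*args) for version in VERSIONS}) > 1]


if __name__ == "__main__":
    print(n_version_median3(2, 0, 1))   # team C is outvoted here
    print(find_disagreements()[:3])
```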

Anyway, I hope you enjoyed this overview. While researching this topic, I gained a lot of respect for aerospace designers, architects, and engineers. They build highly reliable systems that make it safe for all of us to fly. And we IT people can certainly learn a few tricks from them, especially when it comes to mission-critical systems.